Generating 1 Million PDFs in 10 Minutes with Serverless Rust

Building a PDF rendering pipeline that scales from zero to infinity on a tight budget.

In the last year, I saw two companies struggling with generating documents in the form of PDFs. Both old, with a legacy tech stack that makes every new grad software dev wince.

One of these companies in the finance industry was forced to change as their new system couldn’t keep up with growing demands. While the old system was on-prem on servers in their basement, the new system was to be deployed in the cloud on AWS Lambda to reap the benefits of “infinite scaling” (or so they were promised). The project was supposed to be finished and go live in a matter of weeks—or at least that’s what management thought.

More than half a year later, with 5 full-time engineers on the project, the legacy system is still running full-time, with no end in sight.

I always found this project interesting. On the one hand due to its initial simplicity task to just render a pdf, but also because of the performance requirement that came along. After I heard how inefficient the new system is being implemented I was just thinking to myself “this can be implemented so much more efficiently”. And so I did.

This is the result—a guide on how to implement a high-performant & cost-efficient PDF rendering pipeline and deploy it.

If you are interested about the tech stack before you continue, here it is:

Rust, Terraform, AWS [SQS, S3, Lambda, API Gateway]

Huge shoutout to Typst, the underlying PDF typesetting engine for making this project possible.

You can find the code for the whole project on github: papermake-aws

So let’s get going 🚴🏻

Making millions in minutes, why?

In the financial industry, generating personalized reports at scale is a common challenge. Quarterly statements, tax documents, and trade confirmations often need to be generated for millions of customers within tight time windows. Waiting days for your trade confirmation is not only extremely inconvenient for customers but also not allowed by regulations. Eventually, customers will complain to the BaFin (German Federal Financial Supervisory Authority), and your company will get fined.

So let’s try to prevent these issues from happening.

Setting the stage

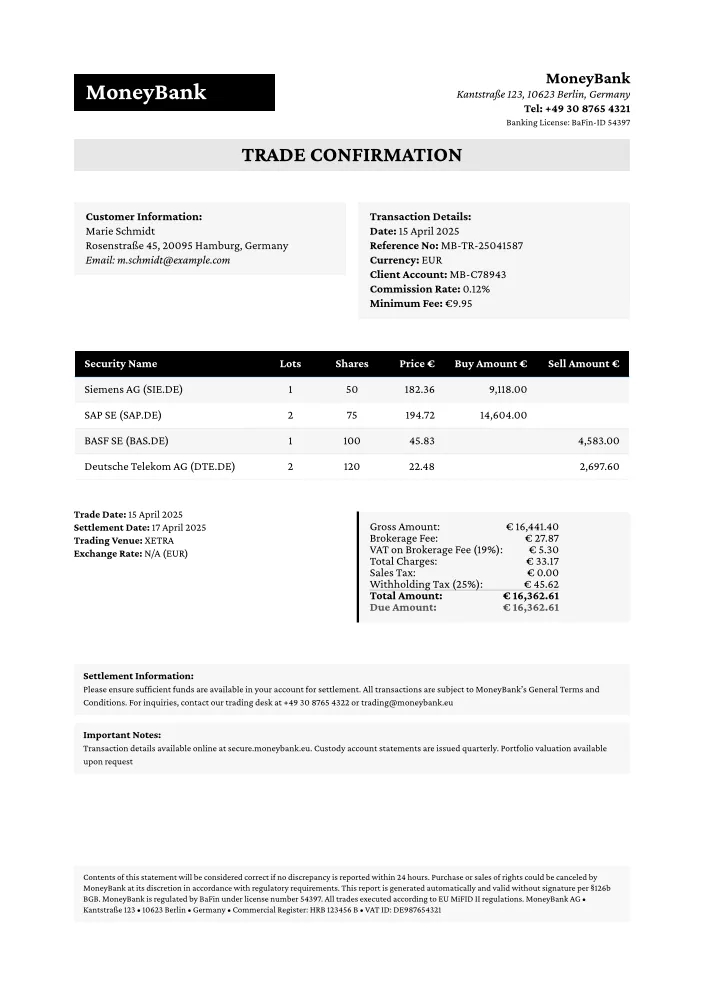

Our imaginary client, MoneyBank, needs to generate end-of-day trade confirmations for all transactions processed during market hours. With approximately 1 million trades per day, they need PDF generation to complete within minutes after market close (we will target 10 minutes because that’s sounds good). Preferably the infrastructure is cost-effective and scales with demand.

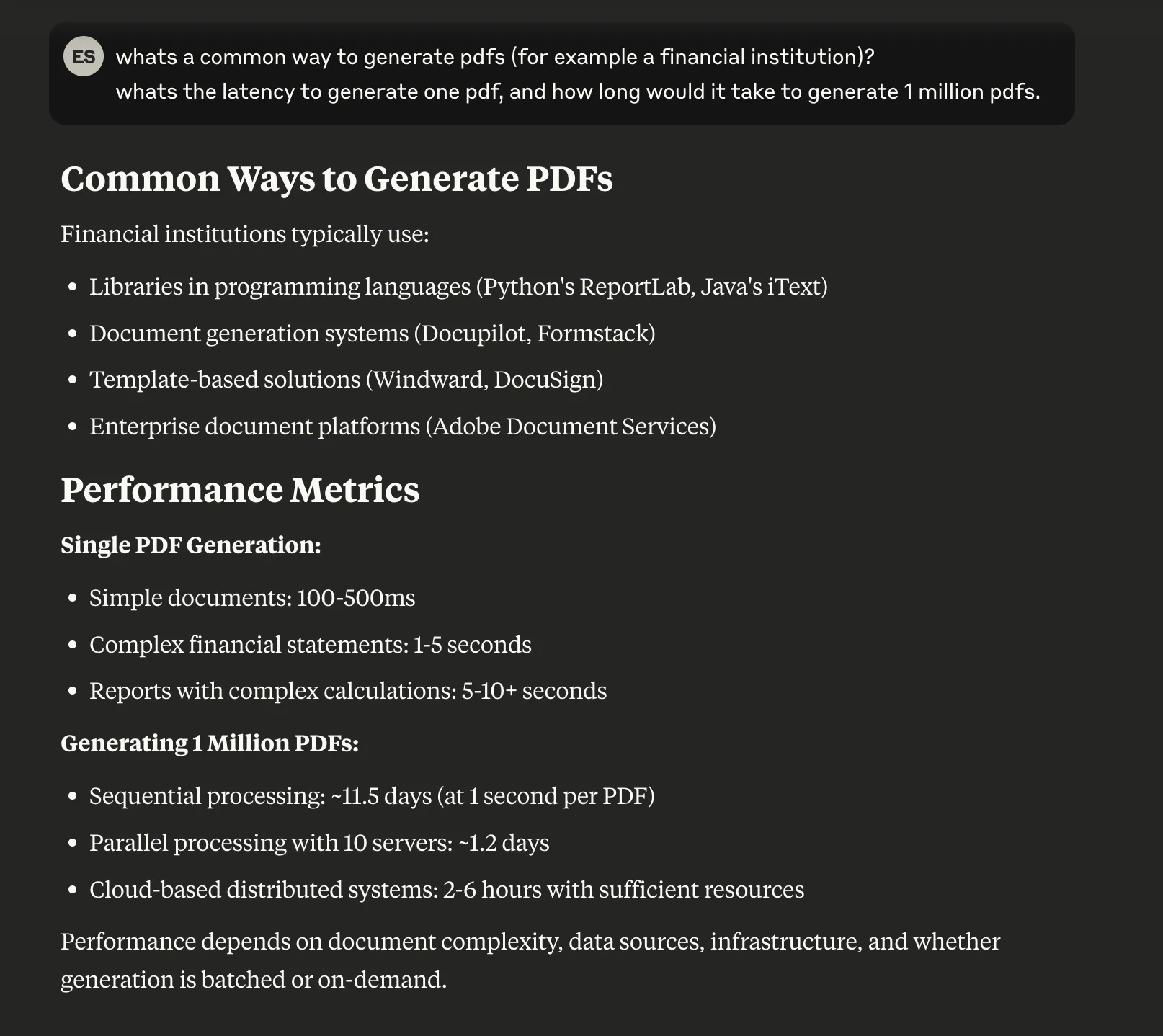

Just to make the point clear: generating 1 million PDFs in 10 minutes is no joke. That’s 1,667 PDFs per second, or ~0.6ms per PDF. With common PDF generators that take around 1 second each, we would need 11.5 compute days. Or 10 minutes times 1667 vCPUs—assuming they scale nicely.

Using EC2 On-Demand c6i.32xlarge instances (128 vCPUs each):

- Need 13 instances

- Cost: 6.208€ per hour per instance (eu-central-1)

- Total: 13,45€ for 10 Minutes

We are going to shave that down to 0.35€, reducing the cost by 97%. But let’s start with the foundation.

Architecture Decisions

There are many technical architectures to make this happen. As mentioned in the introduction we will base our solution upon AWS Lambda, not because I think this is the easiest or most cost-efficient way, but to give an example how it could be done. Having decided on that the rest of the puzzle pieces come naturally.

The system consists of:

- API Gateway: Entry-point to our rendering service.

- SQS: Managing rendering jobs.

- Lambda: Coordinating incoming requests as well as doing the actual PDF rendering.

- S3: Holding our document templates and the final PDFs.

New Rendering Technology

You saw earlier that Claude estimated the latency of rendering a PDF at around ~1 second, or 100ms at best. Since I have neither the intention nor the budget to compensate for this slow performance with massive computational resources, we need a faster way.

Throughout my career, I’ve seen multiple PDF rendering implementations in companies. All of them would be too slow for our goal:

- Puppeteer: ~1-2sec, due to headless browser startup overhead.

- Crystal Reports: ~750-900ms, even worse has legacy windows dependencies (I wouldn’t touch this shit again).

- LaTeX: ~500-800ms for compilation plus rendering.

You can get LaTeX a bit faster, but the bigger issue is that LaTeX comes with a huge compilation package and can be rather memory-hungry. These aren’t the best attributes for deploying to AWS Lambda.

The big benefit of using an actual typesetter like LaTeX over Crystal Reports is that your documents will always be laid out correctly. In Crystal Reports, you move around boxes with a fixed size where your data will be placed. If your customer’s name is longer than the box, it will simply be cut off.

Three years ago, I would have chosen LaTeX myself to build such a PDF rendering service, but since then we’ve gotten Typst—a very promising new typesetting system that is both fast and provides very helpful error messages.

I started working on Papermake, a PDF rendering library that makes use of Typst, and extends it with data based rendering and schema validation, and this guide makes use of it.

Creating the template

To begin with we have to design our template. I quickly designed a trade confirmation for our imaginary MoneyBank

The template definition looks as follows

---id: trade_confirmation---#let trade_confirmation(data) = { // Set document properties set document(title: "Trade Confirmation", author: data.company.name) set page( paper: "a4", margin: 2.2cm189 collapsed lines

)

// Typography settings set text(font: "Crimson Pro", size: 10pt) show heading: set text(font: "Source Sans Pro", weight: "bold")

// Header with company information grid( columns: (auto, 1fr), gutter: 1em, if data.company.logo != none { image(data.company.logo, width: 4cm) } else { box( width: 6cm, inset: 10pt, fill: rgb("#000000"), text(weight: "bold", size: 20pt, fill: white)[#data.company.name] ) }, align(right)[ #text(weight: "bold", size: 14pt)[#data.company.name] \ #text(style: "italic")[#data.company.address] \ #text(weight: "bold")[Tel: #data.company.phone] \ #text(size: 8pt)[Banking License: BaFin-ID 54397] ] )

// Title box( width: 100%, inset: (y: 8pt), fill: rgb("#000").lighten(90%), align(center)[ #text(weight: "bold", size: 18pt)[TRADE CONFIRMATION] ] )

v(0.5cm)

// Customer information grid( columns: (1fr, 1fr), gutter: 1em, box( width: 100%, inset: 10pt, fill: rgb("#f5f5f5"), [ #text(weight: "bold")[Customer Information:] \ #data.customer.name \ #data.customer.address \ #text(style: "italic")[Email: #data.customer.email] ] ), box( width: 100%, inset: 10pt, fill: rgb("#f5f5f5"), [ #text(weight: "bold")[Transaction Details:] \ *Date:* #data.transaction.date \ *Reference No:* #data.transaction.reference \ *Currency:* #data.transaction.currency \ *Client Account:* #data.transaction.client_code \ *Commission Rate:* #data.transaction.commission_percent% \ *Minimum Fee:* €#data.transaction.minimum_fee ] ) )

v(0.5cm)

// Transaction Details Table box( width: 100%, fill: white, inset: 1pt, [ #table( columns: (3fr, 1fr, 1fr, 1fr, 1.5fr, 1.5fr), inset: 8pt, align: (left, center, center, right, right, right), fill: (col, row) => if row == 0 { rgb("#000") } else if calc.odd(row) { rgb("#f5f5f5") } else { white }, stroke: (x, y) => ( if y == 0 { (bottom: 0.5pt + rgb("#0a3d62")) } else { (bottom: 0.2pt + rgb("#cccccc")) } ),

[#text(fill: white, weight: "bold")[Security Name]], [#text(fill: white, weight: "bold")[Lots]], [#text(fill: white, weight: "bold")[Shares]], [#text(fill: white, weight: "bold")[Price €]], [#text(fill: white, weight: "bold")[Buy Amount €]], [#text(fill: white, weight: "bold")[Sell Amount €]],

..data.details.flatten().map(row => ( [#row.stock], [#row.lots], [#row.shares], [#row.price], [#row.buy_amount], [#row.sell_amount] )).flatten(), ) ] )

v(0.5cm)

// Transaction Summary grid( columns: (1fr, 1fr), gutter: 1em, [ #text(size: 9pt)[ #text(weight: "bold")[Trade Date:] #data.transaction.date \ #text(weight: "bold")[Settlement Date:] 17 April 2025 \ #text(weight: "bold")[Trading Venue:] XETRA \ #text(weight: "bold")[Exchange Rate:] N/A (EUR) ] ], box( width: 100%, fill: rgb("#f5f5f5"), stroke: (left: 2pt + rgb("#000")), radius: (right: 4pt), inset: (x: 10pt, y: 8pt), [ #table( columns: (auto, auto), inset: (left: 0pt, right: 0pt, top: 2pt, bottom: 2pt), align: (left, right), stroke: none,

[Gross Amount:], [€ #data.summary.gross_amount], [Brokerage Fee:], [€ #data.summary.brokerage_fee], [VAT on Brokerage Fee (19%):], [€ #data.summary.vat_brokerage_fee], [Total Charges:], [€ #data.summary.total_charges], [Sales Tax:], [€ #data.summary.sales_tax], [Withholding Tax (25%):], [€ #data.summary.withholding_tax], table.hline(stroke: 0.5pt + gray), [#text(weight: "bold")[Total Amount:]], [#text(weight: "bold")[€ #data.total_amount]], [#text(weight: "bold", fill: rgb("#555"))[Due Amount:]], [#text(weight: "bold", fill: rgb("#555"))[€ #data.due_amount]], ) ] ) )

v(0.8cm)

box( width: 100%, fill: rgb("#f5f5f5").lighten(20%), inset: 8pt, [ #text(size: 9pt, weight: "bold")[Settlement Information:] \ #text(size: 8pt)[ Please ensure sufficient funds are available in your account for settlement. All transactions are subject to MoneyBank's General Terms and Conditions. For inquiries, contact our trading desk at +49 30 8765 4322 or trading\@moneybank.eu ] ] )

box( width: 100%, fill: rgb("#f5f5f5").lighten(20%), inset: 8pt, [ #text(size: 9pt, weight: "bold")[Important Notes:] \ #text(size: 8pt)[ Transaction details available online at secure.moneybank.eu. Custody account statements are issued quarterly. Portfolio valuation available upon request ] ] )

v(1fr)

// Disclaimer box( width: 100%, inset: 8pt, fill: rgb("#f8f8f8"), radius: 2pt, text(size: 7.5pt)[ Contents of this statement will be considered correct if no discrepancy is reported within 24 hours. Purchase or sales of rights could be canceled by MoneyBank at its discretion in accordance with regulatory requirements. This report is generated automatically and valid without signature per §126b BGB. MoneyBank is regulated by BaFin under license number 54397. All trades executed according to EU MiFID II regulations. MoneyBank AG • Kantstraße 123 • 10623 Berlin • Germany • Commercial Register: HRB 123456 B • VAT ID: DE987654321 ] )}

#let data = json.decode(sys.inputs.data)#trade_confirmation(data)Two things to notice

- The template definition is split into frontmatter that Papermake expects with some metadata and the typst markdown

- Notice the usage of variables like

#data.customer.name. This will be interpolated by Papermake with the data we are providing it in the request.

For example the document above is rendered with the data

{ "company": { "logo": null, "name": "MoneyBank", "address": "Kantstraße 123, 10623 Berlin, Germany", "phone": "+49 30 8765 4321" }, "customer": { "name": "Anneliese Süßebier", "address": "Rochus-Klingelhöfer-Platz 6, 95807 Tuttlingen, Germany", "email": "a.süßebier@hotmail.de" }, "transaction": { "date": "05 April 2025", "reference": "MB-TR-25040562", "currency": "EUR", "client_code": "MB-C74985", "commission_percent": "0.19", "minimum_fee": "8.27"33 collapsed lines

}, "details": [ { "stock": "BASF SE (BAS.DE)", "lots": "2", "shares": "75", "price": "53,71", "buy_amount": "4.028,58", "sell_amount": "" }, { "stock": "Siemens AG (SIE.DE)", "lots": "3", "shares": "125", "price": "177,08", "buy_amount": "", "sell_amount": "22.134,51" }, { "stock": "Daimler AG (DAI.DE)", "lots": "3", "shares": "75", "price": "76,17", "buy_amount": "5.712,79", "sell_amount": "" }, { "stock": "Allianz SE (ALV.DE)", "lots": "1", "shares": "125", "price": "228,33", "buy_amount": "28.541,83", "sell_amount": "" } ], "summary": { "gross_amount": "-16.148,68", "brokerage_fee": "30,68", "vat_brokerage_fee": "5,83", "total_charges": "36,51", "sales_tax": "0,00", "withholding_tax": "0,00" }, "total_amount": "-16.185,20", "due_amount": "-16.185,20"}Papermake allows for schema validation, ensuring all required data fields are present, which is definitely advisable in production, but I am going to omit that here.

Implementing our two lambda functions

We implement both of our lambdas in Rust using cargo-lambda. Rust compiles to a native binary so there are no dependencies to a runtime and no coldstart wait times with for example JVM.

The following code examples are not complete. I only included the main logic, that I wanted to highlight. You can find the complete source code on github.

Request handler

The request-handler lambda has only one job: Receive a request from API Gateway with a batch of render definitions and pass them to SQS.

use aws_lambda_events::{apigw::{ApiGatewayProxyRequest, ApiGatewayProxyResponse}, encodings::Body};use serde::{Deserialize, Serialize};use serde_json::json;use uuid::Uuid;use lambda_runtime::{service_fn, LambdaEvent, Error, run};

#[derive(Deserialize)]struct RenderRequest { template_id: String, data: serde_json::Value,}

#[derive(Serialize)]struct RenderJob { job_id: String, template_id: String, data: serde_json::Value,}

#[tokio::main]async fn main() -> Result<(), Error> { tracing_subscriber::fmt() .with_ansi(false) .without_time() .with_max_level(tracing::Level::INFO) .init();

run(service_fn(function_handler)).await}

async fn function_handler(event: LambdaEvent<ApiGatewayProxyRequest>) -> Result<ApiGatewayProxyResponse, Error> { // Parse request let body = event.payload.body.unwrap(); let request: RenderRequest = serde_json::from_str(body.as_str())?;

let queue_url = std::env::var("QUEUE_URL").expect("QUEUE_URL must be set");

// Generate job ID let job_id = Uuid::new_v4().to_string();

// Create job and send to SQS let job = RenderJob { job_id: job_id.clone(), template_id: request.template_id.clone(), data: request.data.clone(), };

let config = aws_config::load_from_env().await; let sqs_client = aws_sdk_sqs::Client::new(&config);

// Send to SQS and return immediately sqs_client.send_message() .queue_url(&queue_url) .message_body(serde_json::to_string(&job)?) .send() .await?;

// Return job ID immediately Ok(ApiGatewayProxyResponse { status_code: 202, // Accepted body: Some(Body::Text(json!({"job_id": job_id, "status": "queued"}).to_string())), is_base64_encoded: false, ..Default::default() })}I had a serialization error of the LambdaEvent and couldn’t figure that out for hours. Turns out you should keep your crates, especially aws_lambda_events up to date.

Renderer

The Lambda function to render PDF first get’s the render from SQS, renders the PDF using papermake and then uploads the PDF to S3

use aws_lambda_events::sqs::SqsEvent;use lambda_runtime::{run, service_fn, Error, LambdaEvent};use serde::{Deserialize, Serialize};use std::env;use thiserror::Error;

#[derive(Debug, Deserialize, Serialize)]struct RenderJob { job_id: String, template_id: String, data: serde_json::Value,}

#[derive(Error, Debug)]pub enum RenderError { #[error("Failed to parse job: {0}")] JobParseError(String), #[error("Failed to render PDF: {0}")] RenderingError(String), #[error("S3 operation failed: {0}")] S3Error(String), #[error("Environment variable not found: {0}")] EnvVarError(String),}

async fn function_handler(event: LambdaEvent<SqsEvent>) -> Result<(), Error> { let templates_bucket = env::var("TEMPLATES_BUCKET") .map_err(|_| RenderError::EnvVarError("TEMPLATES_BUCKET".to_string()))?; let results_bucket = env::var("RESULTS_BUCKET") .map_err(|_| RenderError::EnvVarError("RESULTS_BUCKET".to_string()))?;

// Create S3 client let config = aws_config::load_from_env().await; let s3_client = aws_sdk_s3::Client::new(&config);

// Process each message from SQS for record in event.payload.records { let message_body = record.body.as_ref() .ok_or_else(|| RenderError::JobParseError("Empty message body".to_string()))?;

// Parse the job from the message let job: RenderJob = match serde_json::from_str(message_body) { Ok(job) => job, Err(e) => { eprintln!("Failed to parse job: {}", e); continue; // Skip this message and move to the next one } };

println!("Processing job {}: template={}", job.job_id, job.template_id);

// Get template from S3 let template_result = s3_client .get_object() .bucket(&templates_bucket) .key(&job.template_id) .send() .await;

let template = match template_result { Ok(t) => t, Err(e) => { eprintln!("Failed to fetch template {}: {}", job.template_id, e); continue; } };

let template_data = match template.body.collect().await { Ok(data) => data.to_vec(), Err(e) => { eprintln!("Failed to read template data: {}", e); continue; } };

// Render PDF using papermake let render_result = match render_pdf( &job.template_id, &template_data.as_slice(), &job.data, ) { Ok(result) => result, Err(e) => { eprintln!("Rendering error: {}", e); continue; } };

if let None = render_result.pdf { eprintln!("Rendering result is None for job {}", job.job_id); continue; }

let pdf = render_result.pdf.unwrap();

// Upload PDF to S3 match s3_client .put_object() .bucket(&results_bucket) .key(format!("{}.pdf", job.job_id)) .body(pdf.into()) .send() .await { Ok(_) => println!("Successfully uploaded PDF for job {}", job.job_id), Err(e) => eprintln!("Failed to upload PDF for job {}: {}", job.job_id, e), } }

// Return OK to acknowledge processing of all messages Ok(())}

#[tokio::main]async fn main() -> Result<(), Error> { tracing_subscriber::fmt() .with_ansi(false) .without_time() .with_max_level(tracing::Level::INFO) .init();

run(service_fn(function_handler)).await}

// Helper function to render PDF using papermakefn render_pdf( id: &str, template_data: &[u8], data: &serde_json::Value,) -> Result<papermake::render::RenderResult, Box<dyn std::error::Error>> { // Initialize papermake renderer let template_data = String::from_utf8(template_data.to_vec())?; let template = papermake::Template::from_file_content(id, &template_data)?;

// Render PDF let result = papermake::render_pdf(&template, data, None)?;

Ok(result)}Both lambdas are compiled to arm64 using the release flag and then zipped.

cargo lambda build --release --arm64Don’t forget to add the needed fonts to the pdf-renderer.

Terraform definition

Having the whole stack as IaC is a pure blessing. Not only did it allow me to iterate rather quickly but I can also easily tear the whole infrastructure down on a single click—without leaving a mess of things in AWS that eat up your budget.

Let’s look at the terraform definition that creates all the needed infrastructure. The terraform module takes care of creating the S3 buckets, SQS queue, Lambda functions and API Gateway.

module "pdf_renderer" { source = "../../modules/pdf_renderer"

environment = "dev" project_name = "papermake-pdf"

# S3 bucket configurations templates_bucket_name = "papermake-templates-dev" results_bucket_name = "papermake-results-dev"

# SQS queue configuration queue_name = "pdf-render-queue-dev"

# Lambda configurations render_lambda_memory = 256 # can be increased if needed render_lambda_timeout = 300 # 5 minutes

# API Gateway configuration api_name = "pdf-renderer-api-dev" api_stage = "v1"}The Lambda function uses a custom runtime based on Amazon Linux 2023 and runs on ARM64 architecture for better cost efficiency

locals { common_tags = { Environment = var.environment Project = var.project_name ManagedBy = "terraform" }}

# S3 Bucketsresource "aws_s3_bucket" "templates" { bucket = var.templates_bucket_name tags = local.common_tags}

resource "aws_s3_bucket" "results" { bucket = var.results_bucket_name tags = local.common_tags}

# SQS Queueresource "aws_sqs_queue" "render_queue" { name = var.queue_name visibility_timeout_seconds = 900 # 15 minutes message_retention_seconds = 1209600 # 14 days tags = local.common_tags}

# Request Handler Lambda Functionresource "aws_lambda_function" "request_handler" { filename = "../../../lambda_functions/request_handler/pdf_request_handler.zip" function_name = "${var.project_name}-request-handler-${var.environment}" role = aws_iam_role.request_handler_role.arn handler = "bootstrap" architectures = ["arm64"] runtime = "provided.al2023" memory_size = var.request_handler_memory timeout = var.request_handler_timeout source_code_hash = filebase64sha256("../../../lambda_functions/request_handler/pdf_request_handler.zip")

environment { variables = { QUEUE_URL = aws_sqs_queue.render_queue.url } }

tags = local.common_tags}

# Renderer Lambda Functionresource "aws_lambda_function" "renderer" { filename = "../../../lambda_functions/renderer/pdf_renderer.zip" function_name = "${var.project_name}-renderer-${var.environment}" role = aws_iam_role.renderer_role.arn handler = "bootstrap" architectures = ["arm64"] runtime = "provided.al2023" memory_size = var.renderer_memory timeout = var.renderer_timeout source_code_hash = filebase64sha256("../../../lambda_functions/renderer/pdf_renderer.zip")

environment { variables = { TEMPLATES_BUCKET = aws_s3_bucket.templates.id RESULTS_BUCKET = aws_s3_bucket.results.id FONTS_DIR = "fonts" } }

tags = local.common_tags}

# API Gatewayresource "aws_apigatewayv2_api" "main" { name = var.api_name protocol_type = "HTTP" description = "PDF Renderer API"}

resource "aws_apigatewayv2_stage" "main" { api_id = aws_apigatewayv2_api.main.id name = var.api_stage auto_deploy = true}

# API Gateway Integration with Request Handler Lambdaresource "aws_apigatewayv2_integration" "request_handler" { api_id = aws_apigatewayv2_api.main.id integration_type = "AWS_PROXY"

connection_type = "INTERNET" description = "Request Handler Lambda integration" integration_method = "POST" integration_uri = aws_lambda_function.request_handler.invoke_arn}

# API Gateway Routeresource "aws_apigatewayv2_route" "render_pdf" { api_id = aws_apigatewayv2_api.main.id route_key = "POST /render" target = "integrations/${aws_apigatewayv2_integration.request_handler.id}"}

# Request Handler Lambda Permission for API Gatewayresource "aws_lambda_permission" "api_gw" { statement_id = "AllowAPIGatewayInvoke" action = "lambda:InvokeFunction" function_name = aws_lambda_function.request_handler.function_name principal = "apigateway.amazonaws.com" source_arn = "${aws_apigatewayv2_api.main.execution_arn}/*/*"}

# Lambda Event Source Mapping for Rendererresource "aws_lambda_event_source_mapping" "sqs_trigger" { event_source_arn = aws_sqs_queue.render_queue.arn function_name = aws_lambda_function.renderer.arn batch_size = 1}After deploying the whole stack using terraform apply we can test if with a sample request:

curl --request POST \ --url https://xxxxxxxxx.execute-api.eu-central-1.amazonaws.com/v1/render \ --header 'Content-Type: application/json' \ --data '{ "jobs": [ { "template_id": "trade_confirmation", "data": { "company": { "logo": null, "name": "MoneyBank 2", "address": "Kantstraße 123, 10623 Berlin, Germany", "phone": "+49 30 8765 4321" }, ... "summary": { "gross_amount": "16,441.40", "brokerage_fee": "27.87", "vat_brokerage_fee": "5.30", "total_charges": "33.17", "sales_tax": "0.00", "withholding_tax": "45.62" }, "total_amount": "16,362.61", "due_amount": "16,362.61" } } ]}'Getting back

HTTP/1.1 202 AcceptedApigw-Requestid: Jal4uiuOFiAEJ7Q=Connection: closeContent-Length: 70Content-Type: text/plain; charset=utf-8Date: Tue, 22 Apr 2025 08:15:52 GMT

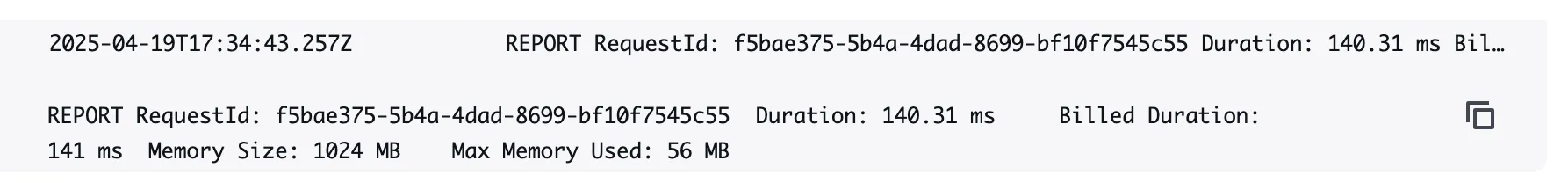

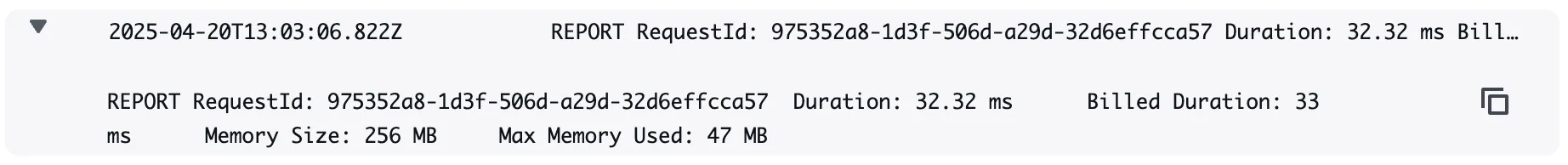

{"job_ids":["556393ce-74c6-4115-87d7-ed9ad6856d2a"],"status":"queued"}Looking into the logs of our rendere we see the whole lambda with fetching the template and rendering it took 141ms. Not bad, but also not good enough. We still have some work to do.

Performance Tuning

To achieve our goal of 1 million PDFs in under 10 minutes without breaking the bank, we needed to optimize several aspects of the system:

1. Lambda Concurrency

AWS Lambda has default concurrency limits that need to be increased. I deployed all of this on a brand new AWS account, so I had a limit of only 10 unreserved concurrent invocations. But a performance-ready configuration would look like this:

# Auto-scaling for the renderer Lambda based on SQS queue metricsresource "aws_appautoscaling_target" "lambda_target" { max_capacity = 1000 # Maximum number of concurrent Lambda instances min_capacity = 5 # Minimum number of concurrent Lambda instances resource_id = "function:${aws_lambda_function.renderer.function_name}:${aws_lambda_function.renderer.version}" scalable_dimension = "lambda:function:ProvisionedConcurrency" service_namespace = "lambda"}

# Scale up policy - Add more provisioned concurrency when queue depth increasesresource "aws_appautoscaling_policy" "scale_up" { name = "scale-up-${var.environment}" policy_type = "TargetTrackingScaling" resource_id = aws_appautoscaling_target.lambda_target.resource_id scalable_dimension = aws_appautoscaling_target.lambda_target.scalable_dimension service_namespace = aws_appautoscaling_target.lambda_target.service_namespace

target_tracking_scaling_policy_configuration { predefined_metric_specification { predefined_metric_type = "LambdaProvisionedConcurrencyUtilization" }

target_value = 0.75 # Try to keep utilization around 75% scale_in_cooldown = 120 # Wait 2 minutes before scaling in scale_out_cooldown = 30 # Only wait 30 seconds before scaling out }}2. Caching

We already have very fast cold-starts due to Rust compiling to a native binary, but creating a connection to S3 or SQS still takes some double-digit milliseconds that we could cache between hot invocations.

Not only that, assuming we are rendering a large amount of the same template just with different data (as we are doing), we can also cache the template and can save on the roundtrip to S3. And now the final ingredient: We can also cache part of the compilation of the template (referred to as world caching in the code).

// Shared resources across invocations#[derive(Debug)]struct SharedResources { s3_client: aws_sdk_s3::Client, templates_bucket: String, results_bucket: String, template_cache: RwLock<HashMap<String, Vec<u8>>>, world_cache: RwLock<HashMap<String, Arc<Mutex<TypstWorld>>>>,}

static RESOURCES: OnceCell<Arc<SharedResources>> = OnceCell::const_new();

// Initialize resources asynchronouslyasync fn initialize_resources() -> Arc<SharedResources> { // Read environment variables let templates_bucket = env::var("TEMPLATES_BUCKET") .expect("TEMPLATES_BUCKET environment variable not set"); let results_bucket = env::var("RESULTS_BUCKET") .expect("RESULTS_BUCKET environment variable not set");

// Initialize AWS client let config = aws_config::load_from_env().await; let s3_client = aws_sdk_s3::Client::new(&config);

// Create and return resources Arc::new(SharedResources { s3_client, templates_bucket, results_bucket, template_cache: RwLock::new(HashMap::new()), world_cache: RwLock::new(HashMap::new()), })}3. Batching

The network latency from sending 1 million requests to API Gateway is already high, which would be a major bottleneck when performance testing this setup. Additionally, SQS has the ability to send batches of jobs to our rendering lambda.

// Group data files by template to reduce template loading overheadfn group_data_by_template(data_files: &[String]) -> HashMap<String, Vec<String>> { let mut groups = HashMap::new();

for data_file in data_files { // Extract template identifier from data filename pattern let template_key = extract_template_key(data_file); groups.entry(template_key).or_default().push(data_file.clone()); }

groups}Results

With all those improvements implemented, we get the following preliminary results:

- Requests: 1000

- Total processing time: 11 seconds

- Throughput: 91 PDFs / second

The total processing time is measured until all PDFs are uploaded to S3. Note, that this is still with only 10 unreserved concurrent invocations that both lambdas share.

We are still below our target of 1,667 PDFs/second, but this should easily scale to our target as soon as AWS increases my quota limit.

Knowing that our renderer takes roughly 35ms per PDF (hot-started, template cached, world cached), we would only need 60 concurrent invocations to get to 0.6ms per PDF.

Cost calculation

For 1 million Lambda invocations with:

- Memory: 256 MB (0.25 GB)

- Billed Duration: 35 ms per invocation

This results in:

- Total GB-seconds: 8,750

- Compute cost: $0.15

- Invocation cost: $0.20

- Total cost without free tier: $0.35

You could see this as a pessimistic calculation as we ignored the batching which would result in fewer invocations and the same GB-seconds. Pretty cheap. All the testing I did was still covered by the free tier. The first free tier quota that got exceeded was S3, with 2,000 requests (us saving PDFs).

Next Steps

Honestly I would love to battle test this with actually rendering 1 million PDFs, but for now I am waiting for the quota increase on concurrent invocations.

A real production PDF pipeline at a company would probably require additional bells and whistles like

- SQS routing based on template id, given you have many different templates to make use of caching without overwhelming it.

- Adding real-time monitoring, alerting for rendering failures, and retry logic

- Multi-region deployment for disaster recovery

- Implementing document signing and encryption layers

This project was meant to demonstrate the power of Rust-based PDF generation with a serverless architecture.

Want to build something similar? Check out the Papermake library I am working on, powered by the amazing Typst typesetting engine. You can also explore the complete source code for this implementation.